Top Metrics for Measuring Success in AI-Generated Responses

Generative AI has become one of the most talked-about technologies of recent years. It is already used to automate customer support interactions, generate creative marketing campaigns, accelerate research, and assist in software development. With it, businesses are changing the way they operate, innovate, and scale. What once looked like a future possibility is now daily reality, as AI applications are integrated into processes across medicine, finance, retail, manufacturing, and beyond. The opportunities are significant: greater customisation, higher efficiency, and entirely new paths to growth.

Still, success with generative AI cannot be taken for granted, however high expectations are. History shows that not every technology adoption produces a noticeable business impact. As the saying goes, you cannot manage what you do not measure. This is especially true for AI, where judging the quality and influence of outputs can be difficult. Measuring generative AI projects is essential to identify areas that need improvement, to determine which use cases deliver the best return on investment (ROI), and to ensure the projects produce real results.

Without a well-defined measurement system, many businesses fall into the trap of “AI for AI’s sake.” They build impressive models that produce humanlike text, images, or insights, but fail to link those results to business value. The usual outcomes are wasted resources, stalled adoption, and growing stakeholder doubt about whether AI really moves the needle.

Many businesses also concentrate only on technical measures like accuracy, latency, or model benchmarks. These measures have value, but they tell only half the story. A chatbot, for instance, might achieve near-perfect response accuracy, yet if it doesn’t lower support costs, improve customer satisfaction, or raise retention, its value to the business remains limited. Likewise, a model with lightning-fast inference may still be regarded as a failure if adoption across teams is low or it does not drive revenue growth.

The importance of KPIs for Generative AI

Key performance indicators (KPIs) are what ensure that generative AI projects deliver meaningful business impact rather than merely showcasing impressive technology. Organisations that define the right KPIs early can align AI projects with broader strategic objectives, measure progress objectively, and demonstrate clear value to stakeholders. Without this alignment, AI initiatives risk remaining isolated experiments that never move beyond proof of concept.

One of the most important functions of KPIs is bridging the gap between technical performance and business results. Conventional AI metrics such as precision, recall, and accuracy work well for well-defined problems like classification or search optimization. Generative AI is different: its outputs, such as long-form text, images, code, or synthetic data, are often subjective and open-ended.
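For contrast, here is a minimal sketch of how precision, recall, and accuracy are computed for a bounded binary classification task, assuming labels where 1 marks the positive class. Each formula relies on a single known-correct label per item, which is exactly what open-ended generative outputs usually lack.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Precision, recall, and accuracy for binary labels (1 = positive class)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and equal in length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # correctly flagged positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false alarms
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed positives
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
        "accuracy": correct / len(pairs),
    }

m = classification_metrics([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
print({k: round(v, 2) for k, v in m.items()})  # {'precision': 0.67, 'recall': 0.67, 'accuracy': 0.6}
```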

Measuring generative AI also poses particular difficulties. Subjectivity dominates: what one reviewer considers excellent, another may see as lacking originality or depth. Bias is another concern; if not properly monitored, generative models may unintentionally perpetuate stereotypes or misinformation. Finally, calculating return on investment can be complex. Benefits like higher productivity, better customer engagement, or faster innovation can take months or even years to fully appear, which makes near-term financial returns hard to quantify.

Despite these obstacles, organizations serious about scaling their use of AI must set clear, unambiguous KPIs. The right measurement framework lets teams prioritize spending, identify underperforming use cases, and continuously improve their models and processes. By looking beyond superficial technical indicators and adopting a thorough KPI plan, companies can help ensure that generative AI projects deliver long-term competitive advantage and operational efficiency.

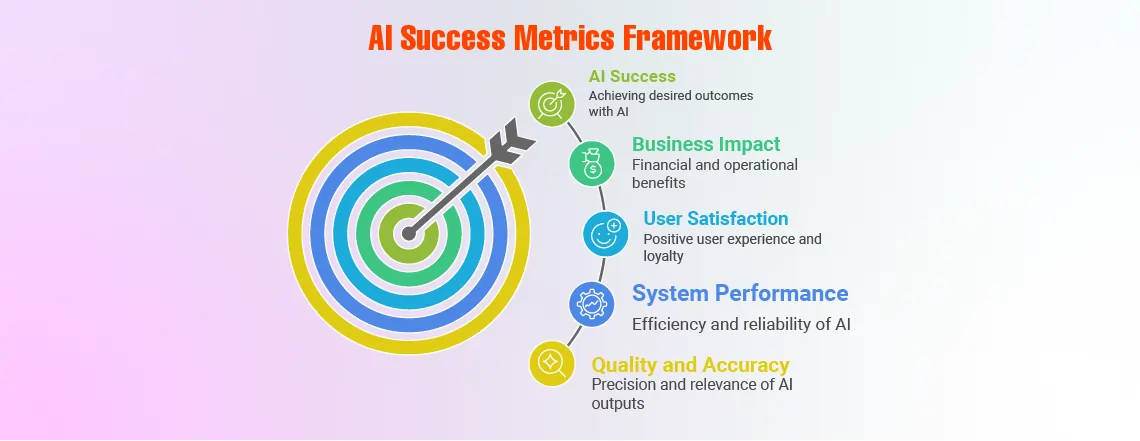

Core categories of AI success metrics

Measuring generative AI requires organizations to take a balanced approach that assesses user satisfaction and business impact in addition to technical performance. Below are four primary categories of metrics that together give a complete view of AI success.

Measurement of Quality and Accuracy

Accuracy and quality are often the first criteria firms consider when assessing generative AI. These measures help determine whether the AI is generating outputs that meet expectations.

Answer Accuracy: Measures how often the AI gives correct, factually grounded replies. A banking chatbot, for instance, should consistently give precise transaction guidance or account information.

Relevance: Goes beyond correctness to judge whether answers match the user’s intent. A customer asking about “delivery times” should not receive an answer about “refunds,” even if that answer is technically correct.

Hallucination Detection: Generative AI is well known for producing confident yet inaccurate information. Monitoring hallucination rates helps teams spot instances when models deviate from verifiable facts.

Task Completion Rate: Measures how often the AI completes a task without human assistance, such as making a reservation or processing a refund.
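As a rough illustration, answer accuracy, hallucination rate, and task completion rate can all be computed from a sample of interactions that human reviewers have labeled. The `Interaction` record and its field names are hypothetical, chosen for this sketch; in practice the labels would come from your own review or annotation process.

```python
from dataclasses import dataclass

@dataclass
class Interaction:
    # Hypothetical reviewer labels for one AI conversation.
    is_accurate: bool        # the answer was factually correct
    has_hallucination: bool  # the answer contained a false or unverifiable claim
    task_completed: bool     # the user's task (e.g., a refund) was finished
    needed_human: bool       # a human agent had to step in

def quality_metrics(sample: list[Interaction]) -> dict[str, float]:
    n = len(sample)
    if n == 0:
        raise ValueError("sample must not be empty")
    return {
        "accuracy": sum(i.is_accurate for i in sample) / n,
        "hallucination_rate": sum(i.has_hallucination for i in sample) / n,
        # Count only tasks the AI finished without human assistance.
        "task_completion_rate": sum(i.task_completed and not i.needed_human for i in sample) / n,
    }

sample = [
    Interaction(True, False, True, False),
    Interaction(True, False, True, True),   # completed, but only after a handoff
    Interaction(False, True, False, True),
    Interaction(True, False, True, False),
]
print(quality_metrics(sample))  # {'accuracy': 0.75, 'hallucination_rate': 0.25, 'task_completion_rate': 0.5}
```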

Consistency and Trust: Users expect the AI to give reliable answers to similar prompts. Erratic outputs erode trust and, in turn, lower adoption.
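One simple, admittedly crude way to put a number on consistency is to send several paraphrases of the same question and measure how much the answers overlap. The sketch below uses average pairwise Jaccard similarity over lowercase word sets; this proxy is an assumption for illustration, and teams often use embedding similarity or a judge model instead.

```python
from itertools import combinations

def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two answers: 0.0 (disjoint) to 1.0 (same word set)."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa and not wb:
        return 1.0
    return len(wa & wb) / len(wa | wb)

def consistency_score(answers: list[str]) -> float:
    """Mean pairwise Jaccard similarity across answers to paraphrased prompts."""
    pairs = list(combinations(answers, 2))
    if not pairs:
        return 1.0  # a single answer is trivially consistent with itself
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)

# Hypothetical answers to three paraphrases of "When will my order arrive?"
answers = [
    "Your order ships in 2 days",
    "Your order ships in 2 days",
    "Refunds take 5 days",
]
print(round(consistency_score(answers), 3))  # 0.407
```

The off-topic third answer drags the score down, which is the kind of erratic behaviour worth alerting on.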

Metrics for System Performance and Efficiency

Even the most intelligent AI loses value if it is slow or unreliable. Performance and efficiency KPIs measure the system’s ability to operate at scale.

Inference Time (Latency): Measures how quickly the AI produces answers. Slow replies in customer-facing situations frustrate users and lower satisfaction.

Error Rate: Tracks technical problems, including crashes, incomplete responses, or incorrect results.

Scalability: Measures the system’s ability to handle rising demand. An e-commerce AI, for example, has to keep pace and stay accurate during holiday traffic peaks.

Uptime and Reliability: Measures the percentage of time the AI is available and operating as intended. Downtime can directly affect customer confidence and revenue.

Together, these measures show whether an AI system is resilient enough for company-scale deployment.
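A minimal sketch of how these operational numbers might be computed from monitoring data, assuming you already collect per-request latencies, request and failure counts, and downtime minutes. It reports median and 95th-percentile latency via Python’s `statistics.quantiles`, since tail latency usually matters more to users than the average; the function names and sample values are illustrative.

```python
import statistics

def latency_percentiles(latencies_ms: list[float]) -> dict[str, float]:
    """Median (p50) and tail (p95) latency; needs at least two samples."""
    # With n=100, quantiles() returns 99 cut points: index 49 is p50, index 94 is p95.
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {"p50_ms": cuts[49], "p95_ms": cuts[94]}

def error_rate(total_requests: int, failed_requests: int) -> float:
    """Share of requests that crashed, timed out, or returned incomplete output."""
    return failed_requests / total_requests if total_requests else 0.0

def uptime_pct(period_minutes: float, downtime_minutes: float) -> float:
    """Percentage of the measurement period during which the service was available."""
    return 100.0 * (period_minutes - downtime_minutes) / period_minutes

# Hypothetical response times (ms) from one monitoring window.
samples = [120, 80, 95, 300, 110, 105, 90, 100, 250, 85]
print(latency_percentiles(samples))              # {'p50_ms': 102.5, 'p95_ms': 277.5}
print(error_rate(2000, 12))                      # 0.006
print(round(uptime_pct(30 * 24 * 60, 43.2), 2))  # 99.9
```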

User-Centric Metrics

Ultimately, AI has to meet its users’ needs. User-centric metrics capture the human side of performance.

Customer Satisfaction (CSAT): Survey or feedback ratings that assess overall satisfaction after an interaction with the AI.

Net Promoter Score (NPS): Gauges customer loyalty and the likelihood that users will recommend the AI-powered service to others.

First Contact Resolution (FCR): Assesses whether a problem is resolved in the first AI interaction without escalation. A high FCR indicates effective automation.

Escalation Rate: Tracks how often an AI interaction requires a human takeover. Generally, a lower escalation rate indicates better AI efficiency and usability.

These KPIs help confirm that the AI is not only practical but also delivers a good, engaging experience.
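The user-centric metrics above reduce to simple ratios once survey and ticket data are available. This sketch assumes CSAT is collected on a 1-5 scale and reported as the share of 4s and 5s, a common convention though organizations define it differently. NPS follows its standard definition: the percentage of promoters (scores of 9-10) minus the percentage of detractors (0-6) on a 0-10 scale. All sample values are hypothetical.

```python
def csat(ratings: list[int]) -> float:
    """Percentage of 1-5 ratings that are 4 or 5 (one common CSAT convention)."""
    return 100.0 * sum(r >= 4 for r in ratings) / len(ratings)

def nps(scores: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    promoters = sum(s >= 9 for s in scores)
    detractors = sum(s <= 6 for s in scores)
    return 100.0 * (promoters - detractors) / len(scores)

def fcr(resolved_first_contact: int, total_issues: int) -> float:
    """Percentage of issues resolved in the first AI interaction."""
    return 100.0 * resolved_first_contact / total_issues

def escalation_rate(escalated: int, total_conversations: int) -> float:
    """Percentage of AI conversations handed over to a human agent."""
    return 100.0 * escalated / total_conversations

print(csat([5, 4, 3, 5, 2]))                     # 60.0
print(nps([10, 9, 8, 7, 6, 3, 10, 9, 5, 10]))    # 20.0
print(fcr(170, 200), escalation_rate(30, 200))   # 85.0 15.0
```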

Indicators of Business Impact

The ultimate test of any AI initiative is whether it produces verifiable business benefit.

Cost Savings: Lower operational expenses, such as needing fewer support staff thanks to AI chatbots.

Productivity Gains: Time staff save when AI handles repetitive tasks, freeing them to focus on higher-value work.

Conversion Rate: How effectively AI interactions lead to sign-ups or purchases in sales and marketing applications.

Revenue Growth: The direct effect of AI on company revenue, for example through upselling suggestions that raise average order value.

Business impact measures translate technical performance into financial terms, which is critical for justifying investment and scaling AI solutions.
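The arithmetic behind three of these business metrics is sketched below. The inputs (deflected tickets, per-ticket costs, session, conversion, and order-value figures) are hypothetical; the per-ticket AI cost is assumed to already include inference and upkeep.

```python
def monthly_cost_savings(deflected_tickets: int, cost_per_human_ticket: float,
                         ai_cost_per_ticket: float) -> float:
    """Savings from tickets the AI resolved instead of a human agent."""
    return deflected_tickets * (cost_per_human_ticket - ai_cost_per_ticket)

def conversion_rate(conversions: int, ai_sessions: int) -> float:
    """Share of AI-assisted sessions that ended in a sign-up or purchase."""
    return conversions / ai_sessions if ai_sessions else 0.0

def aov_uplift(aov_with_ai: float, aov_baseline: float) -> float:
    """Relative change in average order value versus a baseline without AI suggestions."""
    return (aov_with_ai - aov_baseline) / aov_baseline

print(monthly_cost_savings(4000, 6.0, 0.5))  # 22000.0
print(conversion_rate(180, 3000))            # 0.06
print(aov_uplift(46.0, 40.0))                # 0.15
```

Comparing against a baseline period or a control group without AI helps separate the AI’s contribution from seasonal effects.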

Advanced KPI frameworks for generative AI

Beyond basic indicators, businesses need more sophisticated KPI frameworks to assess generative AI comprehensively. These frameworks cover model quality, system reliability, operational and financial impact, and adoption.

Model Quality KPIs go beyond accuracy and recall. Because GenAI outputs are open-ended, evaluation typically combines human review with automated scoring by large language models to gauge relevance, creativity, and factuality.
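One practical pattern is to score a sample with both human reviewers and an automated LLM judge, then check how closely they agree before trusting the automated scores at scale. The sketch below assumes both produce 1-5 rubric scores per dimension; the data and the within-one-point agreement measure are illustrative choices, not a standard. Lower agreement on a dimension (here, factuality) suggests keeping humans in the loop for it.

```python
from statistics import mean

# Hypothetical 1-5 rubric scores for the same five responses,
# one set from human reviewers and one from an LLM judge.
human_scores = {"relevance": [5, 4, 3, 5, 4], "factuality": [5, 5, 2, 4, 4]}
judge_scores = {"relevance": [5, 4, 4, 5, 3], "factuality": [4, 5, 4, 5, 4]}

def compare_raters(human: dict[str, list[int]],
                   judge: dict[str, list[int]]) -> dict[str, dict[str, float]]:
    """Per-dimension means and how often the two raters land within one point."""
    report = {}
    for dim, h in human.items():
        j = judge[dim]
        within_one = sum(abs(a - b) <= 1 for a, b in zip(h, j)) / len(h)
        report[dim] = {
            "human_mean": mean(h),
            "judge_mean": mean(j),
            "agreement_within_1": within_one,
        }
    return report

for dim, summary in compare_raters(human_scores, judge_scores).items():
    print(dim, summary)
```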

System Quality KPIs track the production performance of AI. Important metrics include deployment scale (pipelines and models in use), throughput, latency, and monitoring for drift or security hazards. These help ensure that AI stays fast, dependable, and secure.
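Drift monitoring usually means comparing a recent distribution of inputs or outputs (for example, prompt lengths or topic categories) against a baseline. The sketch below uses the Population Stability Index (PSI), one common drift statistic chosen here for illustration; the bins and counts are hypothetical. A frequently quoted rule of thumb treats PSI above about 0.25 as significant drift and 0.1 to 0.25 as moderate, but thresholds should be tuned to your data.

```python
import math

def psi(expected_counts: list[int], actual_counts: list[int], eps: float = 1e-6) -> float:
    """Population Stability Index between a baseline and a current histogram (same bins)."""
    e_total, a_total = sum(expected_counts), sum(actual_counts)
    score = 0.0
    for e, a in zip(expected_counts, actual_counts):
        # Convert counts to proportions; eps avoids log(0) for empty bins.
        e_pct = max(e / e_total, eps)
        a_pct = max(a / a_total, eps)
        score += (a_pct - e_pct) * math.log(a_pct / e_pct)
    return score

# Hypothetical prompt-length histogram (short / medium / long) last quarter vs. this week.
baseline = [50, 30, 20]
current = [30, 30, 40]
print(round(psi(baseline, current), 3))  # 0.241
```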

Business Operational KPIs evaluate domain-specific effectiveness. For instance, customer service AI might shorten resolution times, while retail apps might raise cart size or recommendation accuracy. In sectors like insurance, teams may measure the time AI saves in document processing.

Adoption KPIs assess user engagement by tracking usage frequency, adoption rates, and qualitative feedback. Strong adoption shows that AI is genuinely adding value to workflows.
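A minimal sketch of two adoption metrics computed from a usage log, assuming each event is a `(user_id, day)` pair and that the number of eligible users is known. Counting distinct active days rather than raw events keeps one heavy user from inflating usage frequency; the log format and names are hypothetical.

```python
from collections import defaultdict
from datetime import date

def adoption_metrics(usage_log: list[tuple[str, date]],
                     eligible_users: int) -> dict[str, float]:
    """Adoption rate and usage frequency from (user_id, day) usage events."""
    active_days: dict[str, set[date]] = defaultdict(set)
    for user_id, day in usage_log:
        active_days[user_id].add(day)  # several uses on one day count once
    active_users = len(active_days)
    return {
        "adoption_rate": active_users / eligible_users,
        "avg_active_days_per_user": (
            sum(len(days) for days in active_days.values()) / active_users
            if active_users else 0.0
        ),
    }

log = [
    ("ana", date(2024, 5, 1)), ("ana", date(2024, 5, 1)), ("ana", date(2024, 5, 2)),
    ("ben", date(2024, 5, 2)), ("chen", date(2024, 5, 3)),
]
print(adoption_metrics(log, eligible_users=10))
```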

Measuring AI in the real world

Consider, for example, a food delivery company that uses a generative AI chatbot for customer care. The chatbot is designed to answer order-related questions, resolve complaints, and reduce reliance on human agents.

The business monitors several key metrics to assess its success. On the accuracy side, performance is judged by how often the chatbot provides correct order-status, refund, or promotion information. The task completion rate shows how many interactions, such as cancelling an order or changing delivery instructions, are handled without human intervention.

User-centric KPIs like first contact resolution (FCR) and customer satisfaction (CSAT) reveal how well the chatbot meets customer expectations. A falling escalation rate means fewer issues require a human takeover, which improves efficiency.

On the business impact side, the company calculates cost savings from reduced agent hours and productivity gains from faster query handling. It also monitors whether better support leads to more repeat orders and higher customer retention.

Finally, the team assesses return on investment by comparing the savings and revenue gains the chatbot delivers against its development and maintenance costs. By combining accuracy, user experience, and business value measures, the company gets a comprehensive view of the AI’s real-world impact.
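The ROI comparison for the food delivery chatbot can be sketched as total benefit minus total cost, divided by total cost, over the same time window. The figures below are hypothetical. Counting gross revenue rather than incremental profit will overstate ROI, so some teams substitute incremental margin for `revenue_gain`.

```python
def roi(cost_savings: float, revenue_gain: float,
        development_cost: float, maintenance_cost: float) -> float:
    """ROI = (total benefit - total cost) / total cost, over one time window."""
    total_cost = development_cost + maintenance_cost
    total_benefit = cost_savings + revenue_gain
    return (total_benefit - total_cost) / total_cost

# Hypothetical first-year figures for the chatbot.
result = roi(cost_savings=180_000, revenue_gain=60_000,
             development_cost=120_000, maintenance_cost=40_000)
print(f"{result:.0%}")  # 50%
```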

New Metrics for the AI search era

Traditional SEO indicators like clicks and page views are no longer sufficient as AI-driven search engines and assistants change how people access information. In this changing environment, companies need new ways to measure visibility and engagement.

One critical measure is brand mentions in AI-generated replies. When conversational AI tools or search assistants reference or recommend a brand, it signals strong visibility in a world where direct answers frequently take the place of links. Closely related is AI search impression share, which tracks how often a brand appears in AI-curated responses relative to competitors.

Answer ranking is equally important. Just as with top Google results, being shown prominently, or even exclusively, in an AI-generated answer can have an outsized impact.
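Brand mentions, impression share, and answer ranking can be estimated from a sample of AI responses to prompts relevant to your market. This sketch assumes the brands in each response have already been extracted in order of appearance; the brand names and data format are hypothetical. Impression share is computed here as the brand’s share of all brand mentions in the sample, one reasonable reading of “relative to competitors.”

```python
from collections import Counter

def visibility_report(answers: list[list[str]], brand: str) -> dict[str, float]:
    """Brand visibility across sampled AI answers.

    Each item in `answers` lists the brands one AI response mentions,
    in order of appearance (an assumed, pre-extracted format).
    """
    mention_counts = Counter(b for brands in answers for b in set(brands))
    positions = [brands.index(brand) + 1 for brands in answers if brand in brands]
    total = sum(mention_counts.values())
    return {
        # Share of sampled answers that mention the brand at all.
        "mention_rate": mention_counts[brand] / len(answers),
        # Brand's share of all brand mentions, i.e. visibility relative to rivals.
        "impression_share": mention_counts[brand] / total if total else 0.0,
        # 1.0 means the brand is always named first when it appears.
        "avg_position": sum(positions) / len(positions) if positions else float("nan"),
    }

sampled = [["Acme", "Globex"], ["Globex"], ["Globex", "Acme", "Initech"], []]
print(visibility_report(sampled, "Acme"))
```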

Beyond visibility, companies should measure engagement outside of clicks. AI-driven recommendations, for example, may trigger social interactions, brand mentions, or shifts in user sentiment that conventional analytics miss.

Finally, assisted attribution becomes important. AI interactions can influence customer journeys indirectly, even when the purchase happens later through a different channel. Tracking how exposure to AI influences final conversions helps uncover this hidden value.
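A simple starting point for assisted attribution is to ask what share of converting customer journeys included at least one AI touchpoint. The sketch below is a basic “any-touch” count rather than a fractional or model-based attribution scheme, and the journey format and the `"ai_assistant"` touchpoint label are hypothetical.

```python
def ai_assisted_share(journeys: list[dict]) -> float:
    """Share of converting journeys that included at least one AI touchpoint."""
    converted = [j for j in journeys if j["converted"]]
    if not converted:
        return 0.0
    assisted = sum(1 for j in converted if "ai_assistant" in j["touchpoints"])
    return assisted / len(converted)

journeys = [
    {"touchpoints": ["ai_assistant", "email"], "converted": True},
    {"touchpoints": ["search_ad"], "converted": True},
    {"touchpoints": ["ai_assistant", "direct"], "converted": True},
    {"touchpoints": ["ai_assistant"], "converted": False},
]
print(f"{ai_assisted_share(journeys):.0%}")  # 67%
```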

These new KPIs help businesses adapt their strategies as AI-powered answers replace link-based discovery.

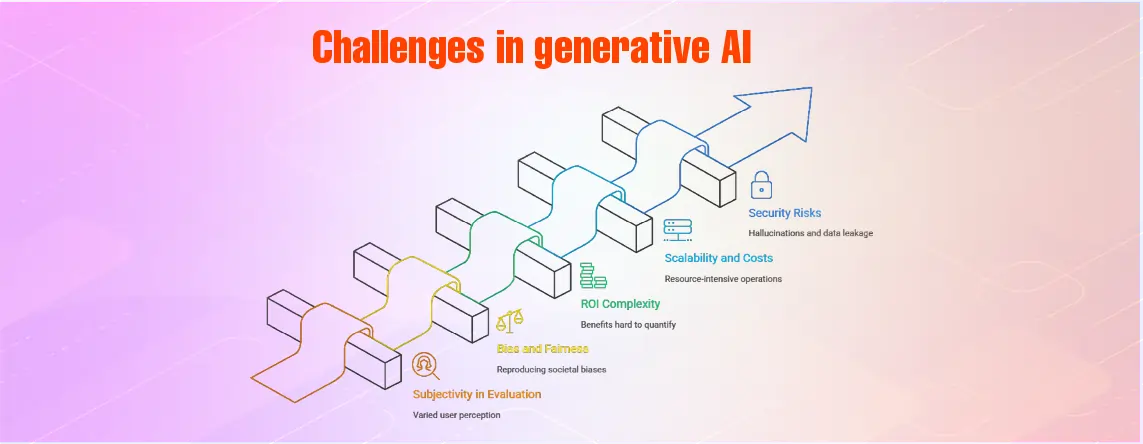

Challenges in generative AI

Generative AI has a lot of potential, but it is not free of drawbacks. The main challenges are as follows:

Subjectivity in Evaluation

Unlike traditional AI, GenAI outputs are often open-ended. What one user sees as helpful, another may find irrelevant. To overcome this, combine quantitative measures (accuracy, task completion) with human reviews and user feedback to capture nuance.

Bias and Fairness

Generative models can inadvertently reproduce or amplify societal biases present in training data. Left unchecked, this can damage customer trust. Organizations should implement bias detection tools, regularly audit outputs, and diversify training datasets.

ROI Complexity

Calculating ROI is difficult because benefits like productivity gains, better customer engagement, or innovation may not show immediate financial returns. A solution is to measure both short-term operational savings and long-term business impact, providing a balanced view of value creation.

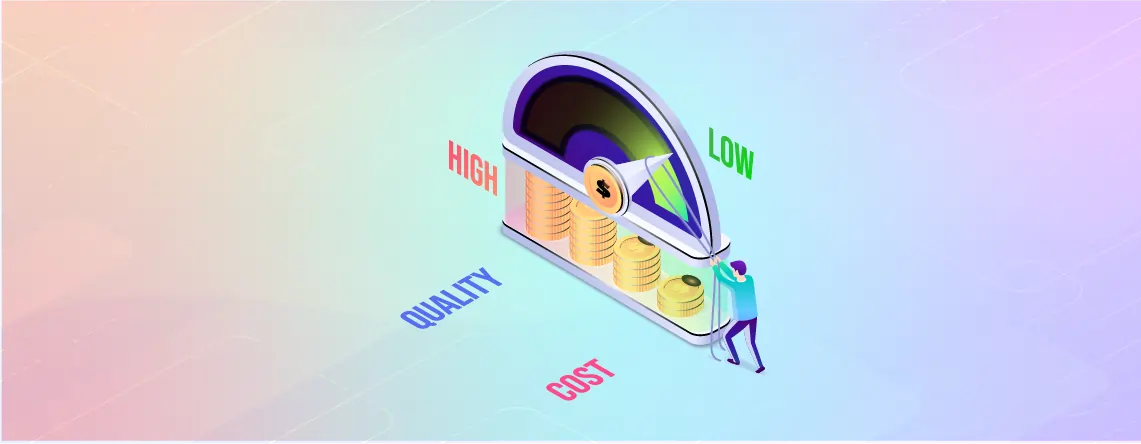

Scalability and Costs

Running advanced AI models can be resource-intensive. Tracking system utilization and optimizing infrastructure helps reduce costs without sacrificing performance.

Security Risks

Hallucinations, data leakage, or adversarial inputs pose real risks. Ongoing monitoring, red-teaming, and robust governance frameworks help mitigate threats.

Future of GenAI measurement

As generative AI continues to evolve, so too must the ways organizations measure its success. Traditional metrics like accuracy and uptime will remain important, but the future demands new dimensions of evaluation that reflect broader business, ethical, and environmental priorities.

One key area is explainability and interpretability. As AI systems make increasingly complex decisions, businesses and regulators will demand clearer visibility into how outputs are generated. Metrics that assess transparency and user trust will grow in importance.

Another emerging focus is sustainability. Training and deploying large AI models consume significant energy. Organizations will soon track carbon footprint and energy efficiency as part of responsible AI measurement, balancing innovation with environmental stewardship.

Human-AI collaboration productivity will also be central. Rather than replacing workers, many GenAI tools augment human performance. Measuring how AI enhances employee efficiency, decision-making, and creativity will highlight its real-world value.

Finally, continuous improvement frameworks, powered by real-time monitoring, user feedback loops, and adaptive retraining, will ensure AI systems remain accurate, ethical, and relevant over time.

In short, the future of AI measurement will extend beyond technical KPIs to include ethical, environmental, and collaborative metrics, ensuring AI success is defined in truly holistic terms.

Conclusion

Generative AI is no longer just an emerging technology; it is quickly becoming a cornerstone of enterprise transformation. But as powerful as it is, success cannot be assumed. Too often, organizations celebrate technical breakthroughs like higher accuracy or faster response times without asking the most critical question: Is this driving real business value?

The key to answering that question lies in measurement. A holistic KPI framework ensures that AI initiatives are not judged on isolated performance metrics but on their ability to improve customer experience, enhance efficiency, and deliver financial impact. Accuracy, latency, and reliability remain vital, but they must be paired with user-centric indicators like CSAT, adoption rates, and business-focused measures such as cost savings, productivity gains, and ROI.

At the same time, leaders must be prepared to address challenges such as bias, subjectivity, cost, and security through proactive monitoring and governance. Looking ahead, new dimensions such as explainability, sustainability, and human-AI collaboration will redefine how success is measured.

Enterprises that embrace this comprehensive approach will unlock AI’s full potential not just as a tool for automation, but as a strategic driver of growth, trust, and competitive advantage. The future belongs to organizations that measure wisely, learn continuously, and adapt quickly.
